Groke Technologies is paving the way for autonomous navigation solutions in the marine industry. On June 2, 2021, we hosted a webinar about building situational awareness for vessels with Groke Technologies, a Finnish marine company. With the help of Silo AI experts and the use of NVIDIA’s products, Groke is utilizing sensor fusion technologies to achieve better situational awareness of a vessel’s surroundings.

During the webinar, we were joined by Juha Rokka, CEO, Groke Technologies; Asier Arranz, Developer Marketing, NVIDIA; and Oscar Guerra, Deep Learning Start-up Account Manager, NVIDIA. Silo AI was represented by Jesus Carabano Bravo, PhD, Senior AI Scientist, and the discussion was hosted by Pauliina Alanen, Silo AI’s Communications & Marketing Lead.

Sensor fusion technologies are used to achieve situational awareness of a machine’s or vehicle’s surroundings. This awareness is built on multiple streams of data from different sensing technologies, and it serves as the basis for autonomous navigation of vehicles and vessels. For a more in-depth review, read our latest technical article on sensor fusion or a recent article on the basics of sensor fusion.

At the end of this recap, you can find the Q&A section from the webinar.

Improved vessel awareness with sensor fusion

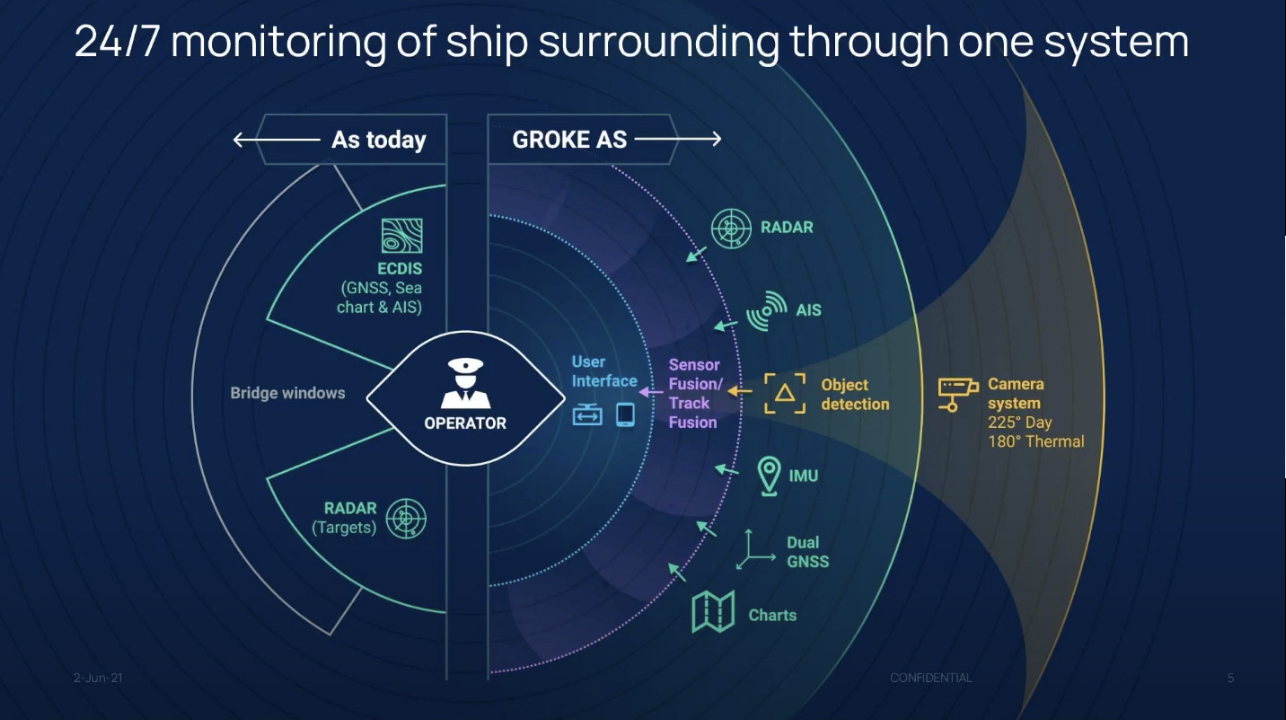

Today, ships and vessels navigate often crowded waterways relying on traditional radar systems and ECDIS (Electronic Chart Display and Information System). ECDIS integrates sea charts with GNSS (Global Navigation Satellite System) positioning and AIS (Automatic Identification System) data. The problem with these systems lies in their detection speed and accuracy. Even a slight delay in the radar system means that operators can’t rely on the information shown on the monitors, which forces them to visually identify other ships or hazards from the bridge windows. In addition, radar can’t tell what type of vessel is nearby. The smaller and faster the surrounding vessels, the greater the cognitive burden and pressure on operators, which in turn increases the risk at sea.

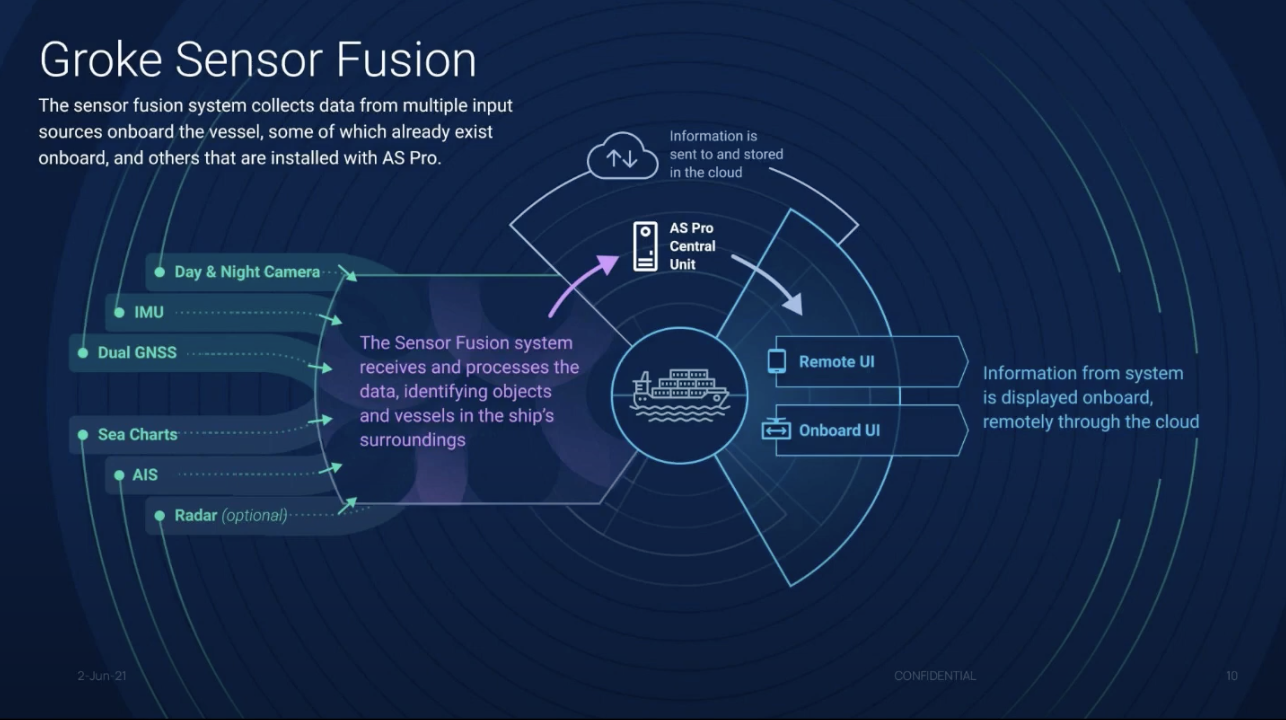

Together with our client Groke Technologies, we've developed an Awareness System (AS) that collects information from multiple sources. For visual confirmation, the system uses wide-field-of-view cameras with day and night vision (see Figure 1). Objects are detected by applying machine vision to day and thermal camera images. Additional information about other objects is received from radar and AIS. GNSS and Inertial Measurement Units (IMUs) are used to determine the vessel's own position and movements, and sea charts place that position in its geographic context.
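Before radar contacts can be combined with the vessel's own GNSS/IMU data, each contact has to be expressed in a common frame. The sketch below shows one simple way to do this. It assumes radar reports range and bearing relative to the bow, heading comes from GNSS/IMU, and a flat-earth approximation is acceptable over a few kilometres. The function names are hypothetical and not taken from Groke's system.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def radar_to_local_en(range_m: float, rel_bearing_deg: float,
                      own_heading_deg: float) -> tuple[float, float]:
    """Convert a radar contact (range, bearing relative to the bow) into an
    (east, north) offset in metres from own vessel. Bearings and heading are
    measured clockwise; own heading is assumed to come from GNSS/IMU."""
    true_bearing = math.radians((own_heading_deg + rel_bearing_deg) % 360.0)
    east = range_m * math.sin(true_bearing)
    north = range_m * math.cos(true_bearing)
    return east, north


def offset_to_latlon(lat_deg: float, lon_deg: float,
                     east_m: float, north_m: float) -> tuple[float, float]:
    """Shift a GNSS position by a local offset using an equirectangular
    (flat-earth) approximation, adequate only over short distances."""
    dlat = north_m / EARTH_RADIUS_M
    dlon = east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg)))
    return lat_deg + math.degrees(dlat), lon_deg + math.degrees(dlon)


# Hypothetical contact: 1.5 km away, 30 degrees to starboard, own heading 045
e, n = radar_to_local_en(1500.0, 30.0, 45.0)
print(offset_to_latlon(60.0, 25.0, e, n))
```

Once every source reports positions in the same frame, the detections can be compared and fused.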

Groke’s forte lies in combining all of the above through sensor fusion and track fusion (see Figure 2). This gives both onshore and offshore users the most accurate and reliable understanding of their surroundings in a single-view user interface. The result is reduced navigation stress and peace of mind during operation in all kinds of conditions, regardless of vessel type.

Developer View – Sensor fusion in action

In the webinar, we heard from Jesus Carabano Bravo, Silo AI's Senior AI Scientist, who’s been working together with the team at Groke Technologies to improve the vessel’s situational awareness with sensor fusion.

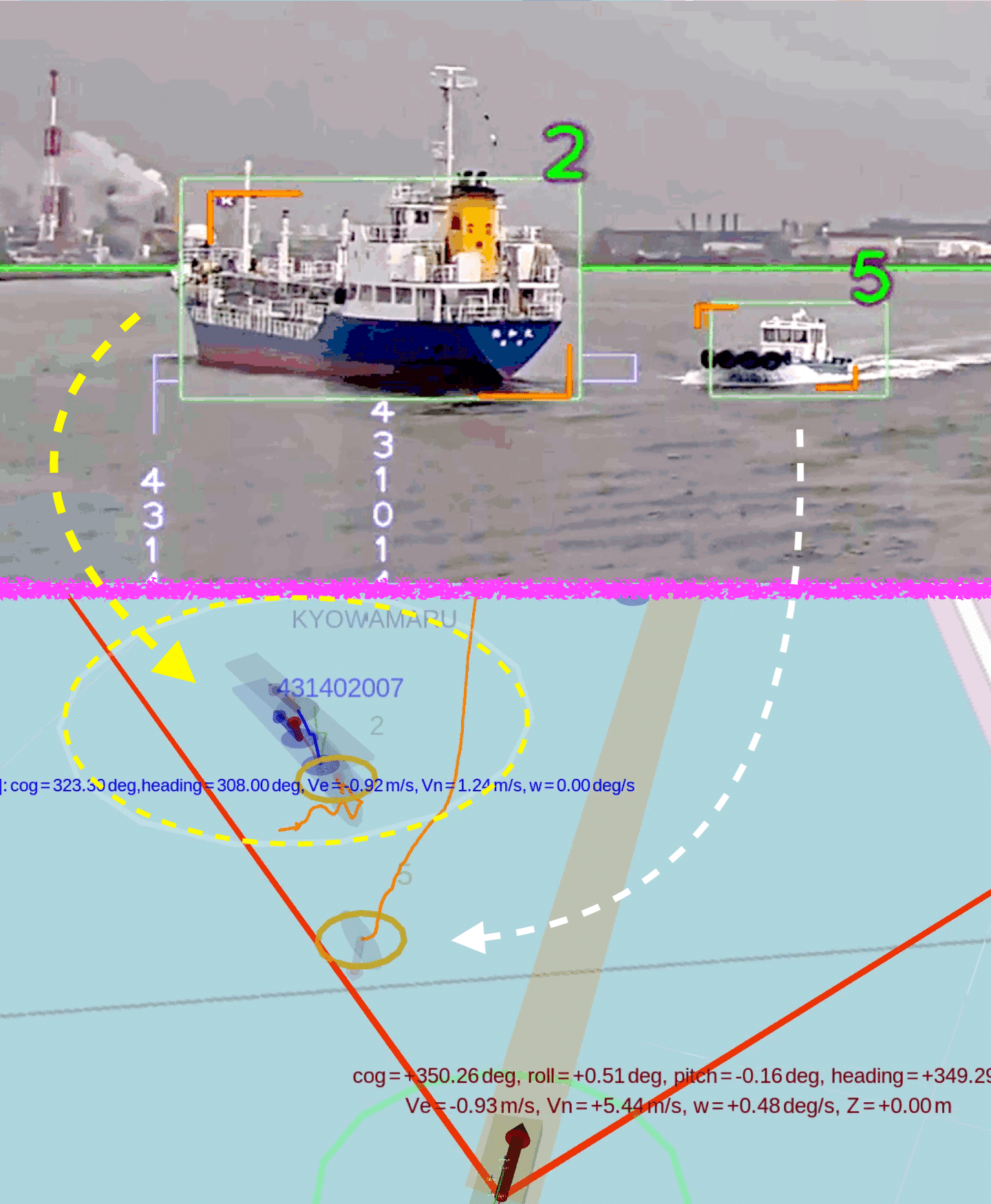

Sensor fusion is the probabilistic combination of data from multiple sources. Example sensors include cameras, radars, lidars, sonars, accelerometers, magnetometers, gyroscopes, altimeters, and satellite navigation receivers, among others. In his talk, Jesus explains which sensors and data sources he has used in his work at Groke and shows how this data is turned into an interpretation of the vessel's surroundings. This is illustrated with screenshots from the Groke Technologies Developer UI in the images below. The Developer UI is meant for technical users; the end user navigating the vessel has a different, less noisy view. If you want to see more of the system in action, watch the entire webinar here.
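The simplest instance of "probabilistic combination" is inverse-variance weighting of two independent estimates of the same quantity. The sketch below assumes each sensor reports a Gaussian estimate (mean, variance) with independent errors. The radar and camera numbers are hypothetical, and this is only an illustration of the principle, not Groke's fusion pipeline.

```python
def fuse_gaussian(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Fuse independent Gaussian estimates (mean, variance) of one quantity
    by inverse-variance weighting; returns (fused_mean, fused_variance)."""
    if not estimates:
        raise ValueError("need at least one estimate")
    information = sum(1.0 / var for _, var in estimates)       # total precision
    mean = sum(m / var for m, var in estimates) / information  # precision-weighted mean
    return mean, 1.0 / information


# Hypothetical range to one target: radar 1000 m (sigma 5 m), camera 1100 m (sigma 50 m)
mean, var = fuse_gaussian([(1000.0, 25.0), (1100.0, 2500.0)])
print(f"fused range {mean:.1f} m, sigma {var ** 0.5:.2f} m")
```

The fused value lands much closer to the more precise radar reading, and the fused variance is smaller than either input's. A Kalman filter applies the same weighting recursively over time.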

Jesus Carabano Bravo, PhD, Senior AI Scientist, Silo AI

Jesus Carabano Bravo is a machine learning expert with experience in computer vision, sensor fusion, and agile software development. He builds machine vision for autonomous vehicles, including projects for two of the largest companies in the automobile and maritime industries. Jesus has a PhD in high-performance computing, with a focus on image processing and parallel computing.

In the Developer UI in Figure 3, the operating vessel is easy to spot, with its exact location shown as coordinates in red. The orange lines show the camera's field of view, the yellow circles are visual detections of nearby vessels, and the blue text is real-time AIS data for vessels, including trajectory and velocity.

The green bounding boxes in the camera view of Figure 4 are produced by neural networks running on NVIDIA GPUs. These deep networks are trained on vast amounts of visual data to achieve human-level perception of maritime objects. Such visual perception and tracking capabilities are not found in today's commercial systems and are thus one of the strong points of Groke's technology.

The optical flow seen in Figure 5 is used to measure the movement of the scene. Since the sensors are mounted on vessels that float, move, turn, and roll, the movement seen by the camera is a combination of the objects' own motion and the ego motion of the vessel carrying the camera. This ego motion must be estimated precisely and removed in order to track the visual objects and predict their trajectories.
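As a rough illustration of ego-motion removal, the sketch below treats the camera's motion between two frames as a single uniform image shift. It estimates that shift as the median flow over points assumed to be static, such as the horizon line or a distant shore, and subtracts it from the flow measured on detected objects. Treating ego motion as a pure translation ignores roll rotation and parallax, so this is a deliberate simplification, not the method used in the webinar. All function names and values are hypothetical.

```python
from statistics import median

Flow = tuple[float, float]  # (dx, dy) image displacement in pixels; image y axis points down


def estimate_ego_flow(background_flow: list[Flow]) -> Flow:
    """Estimate the apparent image shift caused by own-vessel motion from flow
    on points assumed static. The median tolerates a few outliers."""
    if not background_flow:
        raise ValueError("need background flow vectors")
    return (median(dx for dx, _ in background_flow),
            median(dy for _, dy in background_flow))


def remove_ego_motion(object_flow: list[Flow], ego: Flow) -> list[Flow]:
    """Subtract the estimated ego shift, leaving each object's own apparent motion."""
    ex, ey = ego
    return [(dx - ex, dy - ey) for dx, dy in object_flow]


# Hypothetical frame pair: pitching shifts the background about 2 px right and 1 px up
background = [(2.0, -1.0), (2.1, -1.0), (1.9, -1.1), (9.0, 4.0)]  # last vector is an outlier
ego = estimate_ego_flow(background)
print(remove_ego_motion([(5.0, -1.0)], ego))
```

In practice, the flow field itself would come from an optical flow algorithm, and ego motion would more likely be estimated with help from the IMU.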

In Figure 6, the Awareness System (AS) is tracking vessels 2 and 5. Vessel 2 is large enough that it is required by law to broadcast its position via AIS. Vessel 5, in contrast, is so small that it has no AIS broadcasting requirement, so it can easily be missed if one relies only on traditional tracking systems.

Another advantage of the AS is that it can predict a vessel's future location on the map. This is achieved by applying Kalman filters in the sensor fusion, which makes it possible to predict potential collision points with approaching vessels.
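The webinar doesn't detail Groke's filter design, so the following is only a minimal sketch of the idea under stated assumptions. It uses a constant-velocity Kalman filter run independently on the east and north axes, position-only measurements, and hypothetical noise values. To turn the predicted tracks into a collision check, it uses the standard closest-point-of-approach (CPA) calculation. The CPA step is our addition for illustration and is not necessarily what the AS does.

```python
import math
from dataclasses import dataclass


@dataclass
class ConstantVelocityKF1D:
    """Kalman filter for one axis with state [position, velocity]."""
    p: float            # position estimate (m)
    v: float = 0.0      # velocity estimate (m/s)
    a: float = 100.0    # var(position)
    b: float = 0.0      # cov(position, velocity)
    d: float = 100.0    # var(velocity)
    q: float = 0.1      # acceleration noise variance (hypothetical tuning)
    r: float = 25.0     # position measurement variance (hypothetical, sigma 5 m)

    def predict(self, dt: float) -> None:
        # x <- F x with F = [[1, dt], [0, 1]]
        self.p += self.v * dt
        a, b, d, q = self.a, self.b, self.d, self.q
        # P <- F P F^T + Q (white-noise acceleration model)
        self.a = a + 2 * dt * b + dt * dt * d + q * dt**4 / 4
        self.b = b + dt * d + q * dt**3 / 2
        self.d = d + q * dt**2

    def update(self, z: float) -> None:
        # Position-only measurement, H = [1, 0]
        s = self.a + self.r               # innovation variance
        k0, k1 = self.a / s, self.b / s   # Kalman gain
        y = z - self.p                    # innovation
        self.p += k0 * y
        self.v += k1 * y
        a, b = self.a, self.b             # P <- (I - K H) P
        self.a = (1 - k0) * a
        self.b = (1 - k0) * b
        self.d -= k1 * b


class Track2D:
    """Hypothetical track of one vessel in a local east/north frame."""

    def __init__(self, east: float, north: float):
        self.e = ConstantVelocityKF1D(p=east)
        self.n = ConstantVelocityKF1D(p=north)

    def step(self, dt: float, z_east: float, z_north: float) -> None:
        for kf, z in ((self.e, z_east), (self.n, z_north)):
            kf.predict(dt)
            kf.update(z)

    def predict_position(self, t_ahead: float) -> tuple[float, float]:
        return (self.e.p + self.e.v * t_ahead, self.n.p + self.n.v * t_ahead)


def closest_approach(own: Track2D, other: Track2D,
                     horizon_s: float = 600.0) -> tuple[float, float]:
    """Time (s) and distance (m) of closest approach, assuming both vessels
    keep their current estimated velocities."""
    rx, ry = other.e.p - own.e.p, other.n.p - own.n.p
    vx, vy = other.e.v - own.e.v, other.n.v - own.n.v
    vv = vx * vx + vy * vy
    t = 0.0 if vv == 0 else max(0.0, min(horizon_s, -(rx * vx + ry * vy) / vv))
    return t, math.hypot(rx + vx * t, ry + vy * t)


# Hypothetical target moving at (5, -2) m/s, observed once per second
track = Track2D(0.0, 0.0)
for k in range(1, 31):
    track.step(1.0, 5.0 * k, -2.0 * k)
print(track.e.v, track.n.v, track.predict_position(10.0))
```

A small CPA distance within the time horizon would indicate a potential collision point worth flagging to the operator.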

The orange lines in Figure 7 come from the camera view and are affected by the small shakes caused by the water. The blue lines are the information provided by AIS. To combine the two sources, track fusion tells the AS that the orange lines and the blue lines belong to the same vessel.
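The following sketch shows one simple way to decide that a camera track and an AIS track belong to the same vessel. It averages the distance between time-aligned positions, which damps the effect of the camera's small shakes, and then pairs tracks greedily within a distance gate. Production trackers typically use statistical distances and optimal assignment instead. The gate value, track IDs, and function names here are hypothetical.

```python
import math
from itertools import product

Track = list[tuple[float, float]]  # (east, north) in metres at shared timestamps


def mean_distance(a: Track, b: Track) -> float:
    """Average distance between time-aligned positions of two tracks."""
    n = min(len(a), len(b))
    if n == 0:
        return math.inf
    return sum(math.dist(a[i], b[i]) for i in range(n)) / n


def associate(camera: dict[str, Track], ais: dict[str, Track],
              gate_m: float = 50.0) -> dict[str, str]:
    """Greedily pair camera tracks with AIS tracks, closest pairs first."""
    candidates = sorted(
        (mean_distance(c, a), cid, aid)
        for (cid, c), (aid, a) in product(camera.items(), ais.items())
    )
    matches: dict[str, str] = {}
    used_ais: set[str] = set()
    for cost, cid, aid in candidates:
        if cost > gate_m:
            break  # all remaining pairs are even further apart
        if cid in matches or aid in used_ais:
            continue
        matches[cid] = aid
        used_ais.add(aid)
    return matches


camera_tracks = {"cam1": [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0)],
                 "cam2": [(500.0, 500.0), (510.0, 500.0)]}
ais_tracks = {"aisA": [(3.0, 4.0), (13.0, 4.0), (23.0, 4.0)],
              "aisB": [(1000.0, 1000.0)]}
print(associate(camera_tracks, ais_tracks))
```

Here "cam2" stays unmatched. This is the kind of camera-only target, such as a small boat without AIS, that the visual channel adds.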

Groke's Awareness System puts all the information in a single UI, giving an accurate and reliable picture of nearby vessels’ locations, speeds, and trajectories. As mentioned above, end users of the system see a different, yet-to-be-disclosed view of the Awareness System that shows only the information relevant to navigation.

Watch the webinar

If you want to see the full videos described above, watch the webinar here.

Q&A session from the webinar

Q. What are the main differences in situational awareness in the marine industry vs. automotive industry?

Jesus Carabano Bravo, Senior AI Scientist, Silo AI: First of all, a car needs to be able to react fast, within half a second, to prevent possible danger. In a vessel, you can likely be slower, but you need to be very precise. With huge container ships, if you make the wrong decision, you cannot stop it anymore.

In a car, you have a fixed heading. Fixed heading means that the direction you are facing is likely the direction you will travel. At sea, we are always drifting over the water, which makes it more difficult to estimate where you're going. Additionally, the constantly changing elevation (the water bounces the vessel up and down) and the fact that we're looking so far away make it very difficult to determine where a distant object is.
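To see why elevation changes hurt distance estimates at long range, consider a camera at height h above a flat sea. A target's waterline seen at an angle θ below the horizontal lies at roughly d = h / tan θ. The sketch below uses this model, ignoring Earth curvature and refraction, to show how a small pitch error shifts the estimated range. The 10 m camera height and 0.1° error are hypothetical values.

```python
import math


def range_from_depression(camera_height_m: float, depression_deg: float) -> float:
    """Flat-sea pinhole model: distance to a waterline point seen
    depression_deg below the horizontal."""
    if depression_deg <= 0:
        return math.inf  # at or above the horizon: unbounded in this model
    return camera_height_m / math.tan(math.radians(depression_deg))


def depression_for_range(camera_height_m: float, range_m: float) -> float:
    """Inverse of the above: depression angle (degrees) for a given range."""
    return math.degrees(math.atan2(camera_height_m, range_m))


# Hypothetical: camera 10 m above the water, 0.1 degree pitch error from wave motion
h, pitch_err = 10.0, 0.1
for true_range in (200.0, 1000.0, 2000.0):
    theta = depression_for_range(h, true_range)
    est = range_from_depression(h, theta + pitch_err)
    print(f"{true_range:.0f} m -> {est:.0f} m ({abs(est - true_range) / true_range:.0%} error)")
```

The same angular error that barely matters for a nearby boat produces a large relative range error for a distant one, because the depression angle shrinks as distance grows.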

For a deeper dive into situational awareness for autonomous machinery, read our latest blog post.

Q. Have you solved the problem of fog, given that the awareness system is meant to assist in poor-visibility situations?

Juha Rokka, CEO, Groke Technologies: Fog will be one of the most difficult conditions but, by using a thermal camera, we can improve visibility.

Q. Have you used virtual simulation environments to generate training data?

Juha Rokka, CEO, Groke Technologies: We are currently developing virtual simulations and plan to include all sensor simulations to generate training data. Otherwise, we have many systems onboard vessels to collect training data.

Q. Have you considered using stereographic, or multiple cameras to have more accurate distance estimation?

Juha Rokka, CEO, Groke Technologies: We have considered stereo cameras, and from a technology point of view, this is doable. Using a PTZ (pan-tilt-zoom) camera as a binocular is also possible.
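For context on the stereo option: with a rectified camera pair, depth follows Z = f·B/d. Here f is the focal length in pixels, B is the baseline between the cameras, and d is the disparity in pixels. To first order, the depth error is Z²/(f·B) times the disparity error. The sketch below evaluates both expressions for a hypothetical rig (2000 px focal length, 1 m baseline, quarter-pixel matching error). It illustrates the formula and is not a description of any Groke setup.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point from a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_err_px: float = 0.25) -> float:
    """First-order depth uncertainty: dZ ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


# Hypothetical rig: 2000 px focal length, 1 m baseline
for z in (100.0, 500.0, 1000.0):
    d = 2000.0 * 1.0 / z
    print(f"Z={z:.0f} m  disparity={d:.1f} px  error~{depth_error(2000.0, 1.0, z):.1f} m")
```

Because the error grows with the square of the distance, maritime ranges call for wide baselines or long focal lengths.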

Q. How do heavy rain, snowy conditions, and other situations of reduced visibility affect the AS?

Juha Rokka, CEO, Groke Technologies: Environmental impact is of course always there and will mostly affect the accuracy of detection and distance estimation. We are working on a dynamic capability. This means the situation can be improved by constantly being aware of the specific conditions and by combining day and thermal cameras with machine learning and sensor fusion.

Q. For training algorithms, it would be important to have some sort of simulated environment to be able to run thousands of scenarios. We know from the car industry that there exist several different simulators with this purpose, but what about simulating vessels?

Juha Rokka, CEO, Groke Technologies: We have simulation tool development ongoing, as at present there are not many suitable simulation tools available out there.

Q. Do you use Lidar sensors?

Jesus Carabano Bravo, Senior AI Scientist, Silo AI: The problem with Lidar is that the cheaper automotive Lidar doesn't really have the reach (in distance) to be useful in maritime. There are some expensive Lidar solutions that do work in the maritime environment, but their costs are more than the cameras and all the sensors combined. So in that sense, it is difficult to justify their use here.

Would you like to work with a skilled AI Scientist like Jesus? Get in touch with our VP of Business Development Pertti Hannelin to find out how we could help at pertti.hannelin@silo.ai or via LinkedIn.
